Image source: Google Images

1.0 Introduction

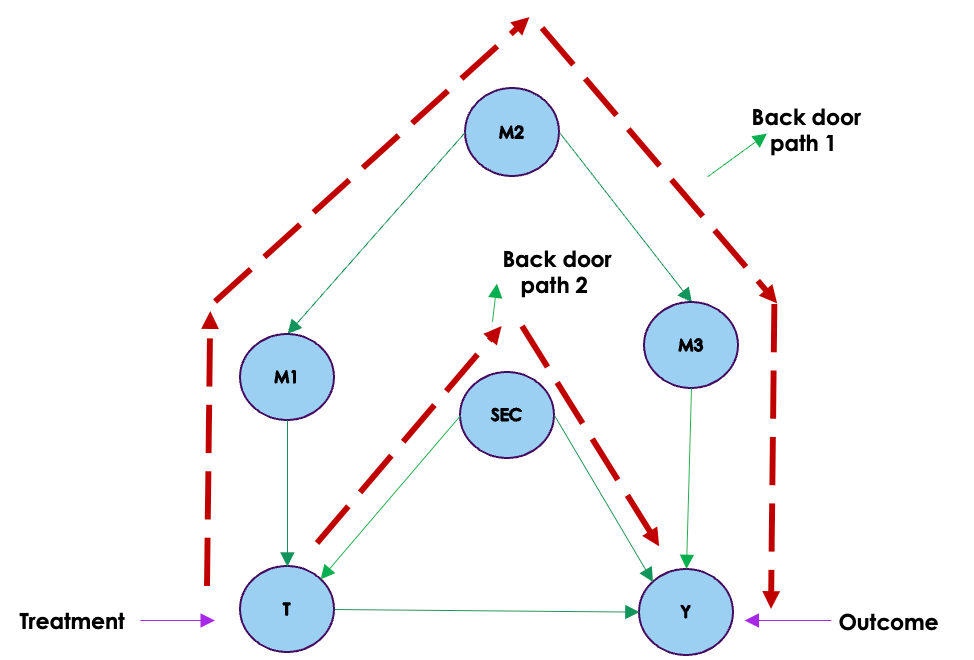

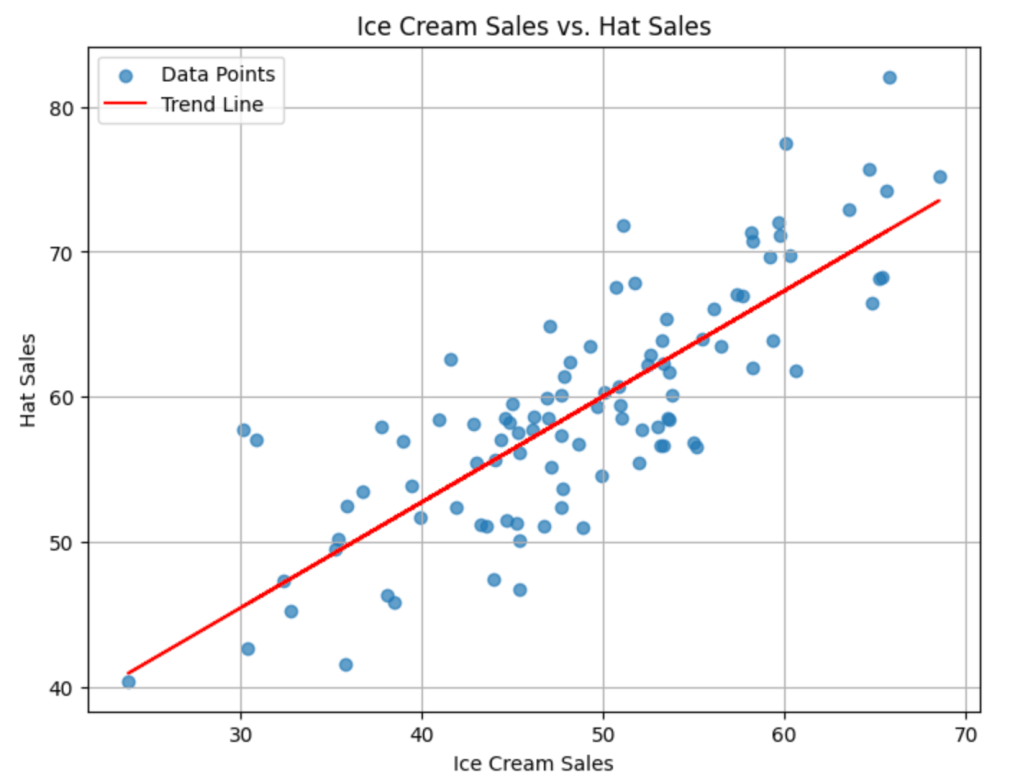

In data science, uncovering cause-and-effect relationships from observational data can be challenging. In our earlier post, we used linear regression, a fundamental statistical method, for causal estimation. In this post, we introduce propensity score matching (PSM). The technique builds on the same regression foundations and is designed to deal with confounding variables, giving a more refined view of causal relationships. Along the way, we'll show how propensity scores are used across several industries and why they play a central role in causal inference.

2.0 Structure

This post covers the following topics:

- Introduction to Propensity Score Matching (PSM)
- Process for implementing PSM
  - Propensity score estimation
  - Matching individuals
  - Stratification
  - Comparison and causal effect estimation
- PSM implementation from scratch using Python
  - Generating synthetic data and defining variables
  - Training the propensity model using logistic regression
  - Predicting the propensity scores for the data
  - Separating the treated and control groups
  - Matching treated and control units with a nearest-neighbour algorithm
  - Calculating the Average Treatment Effect on the Treated (ATT) and the Average Treatment Effect on the Control (ATC)
  - Calculating the ATE
- Practical examples of PSM in retail, finance, telecom and manufacturing

3.0 Introduction to Propensity Score Matching

Propensity score matching is a statistical method used to estimate the causal effect of a treatment, policy, or intervention by balancing the distribution of observed covariates between treated and untreated units. In simpler terms, it aims to create a balanced comparison group for the treated group, making the treatment and control groups more comparable.

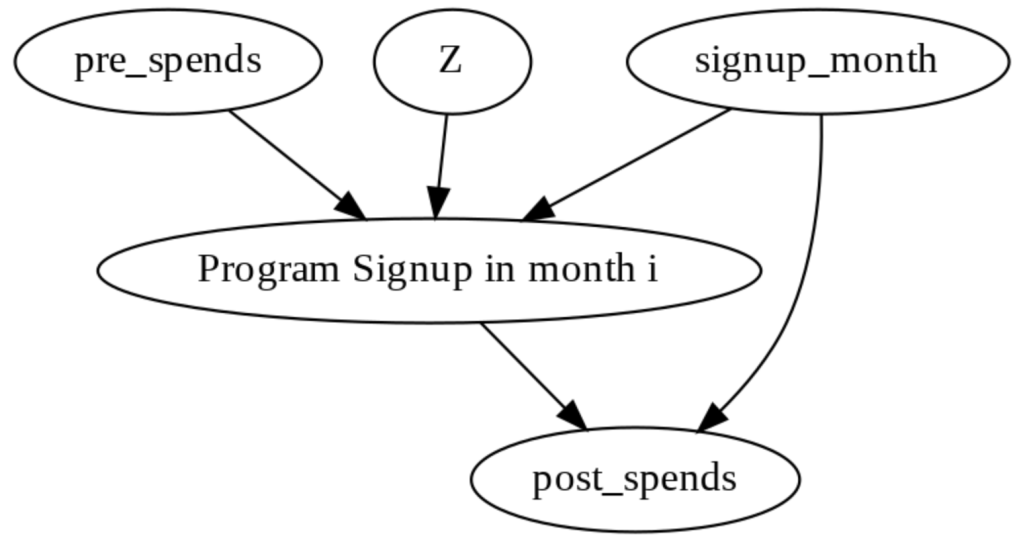

Consider a retail company evaluating the impact of a customer loyalty program on purchase behavior. The company wants to understand if customers enrolled in the loyalty program (treatment group) show a significant difference in their average purchase amounts compared to non-enrolled customers (control group). Covariates for these groups include customer demographics (age, income), historical purchase patterns, and frequency of interactions with the company.

To measure the difference in purchase amounts between these two groups, we need to account for covariates such as age, income, historical purchase patterns and interaction frequency. Buying propensity can differ substantially between subgroups defined by demographics like age or income, and if these subgroups are unevenly represented in the two groups, a raw comparison mixes the program's effect with those differences. To get a fair comparison, it is therefore essential to make the groups comparable before measuring the impact of the loyalty program.

The propensity score serves as a balancing factor. It's like a ticket that each customer gets, representing their likelihood, or “propensity”, to join the loyalty program based on their observed characteristics (age, income, etc.). Propensity score matching then pairs customers from the treatment group (enrolled in the program) with customers from the control group (not enrolled) who have similar scores. This creates comparable sets of customers, mimicking random assignment to the treatment or control group as far as the observed characteristics are concerned.

4.0 Process to implement causal estimation using Propensity Score Matching (PSM)

PSM balances the covariates between the treated (enrolled in the loyalty program) and untreated (not enrolled) groups so that comparing them gives a fairer estimate of the program's causal effect on purchase behavior. The following subsections walk through each step of the approach.

4.1. Propensity Score Estimation: Unveiling Likelihoods

The process starts with propensity score estimation: a classification model such as logistic regression predicts the probability that a customer enrolls in the loyalty program based on the covariates. The model captures the conditional probability of enrollment given the observed customer characteristics, such as demographics, historical purchase patterns and interaction frequency.
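In symbols, writing $T$ for the treatment indicator (1 = enrolled) and $X$ for the covariates, the propensity score is the conditional probability below, and logistic regression models it by passing a linear predictor through the logistic function. The coefficients $\beta_0$ and $\beta$ are notation introduced here for illustration.

$$
e(x) = P(T = 1 \mid X = x), \qquad \hat{e}(x) = \frac{1}{1 + \exp\left(-(\beta_0 + \beta^\top x)\right)}
$$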

The resulting propensity score (PS) for each customer is their estimated likelihood of joining the program and is the key input for the subsequent matching. With logistic regression, the fitted coefficients also indicate how strongly each covariate is associated with enrollment.

The PS is the foundation of propensity score matching: it guides the construction of a balanced, less biased comparison between treated and untreated individuals.

4.2. Matching Individuals: Crafting Equivalence

Once we have the propensity scores, the next step is matching individuals. Think of it as finding a “loyalty twin” for each customer: someone from the treatment group (enrolled in the loyalty program) is matched with a counterpart from the control group (not enrolled) who has a similar propensity score. Matching methods range from simple nearest neighbor matching to more sophisticated optimal matching. Nearest neighbor matching pairs each customer with the one whose propensity score is closest, like pairing a loyalty member with a non-member whose characteristics align closely. Optimal matching goes a step further: rather than choosing each pair in isolation, it selects the set of pairs that minimizes the total difference in propensity scores across all pairs. Either way, the goal is pairs of customers who, based on their propensity scores, could plausibly have been randomly assigned to either group, so that our comparisons are as unbiased as possible with respect to the observed covariates.
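To make the difference between nearest neighbor and optimal matching concrete, here is a small sketch in plain Python with made-up propensity scores. The helper names `greedy_match` and `optimal_match` are hypothetical. Both match one-to-one without replacement, and the optimal version uses brute force, which only works for tiny inputs (in practice optimal matching is solved as an assignment or network-flow problem). The implementation later in this post matches with replacement, where each treated unit simply takes its own nearest control, so this distinction only arises when controls cannot be reused.

```python
from itertools import permutations


def greedy_match(treated_ps, control_ps):
    """Greedy 1:1 nearest-neighbour matching without replacement.

    Treated units are processed in the order given; each takes the closest
    control that has not been used yet.
    """
    available = list(range(len(control_ps)))
    pairs, total = [], 0.0
    for t_idx, t_score in enumerate(treated_ps):
        best = min(available, key=lambda c: abs(t_score - control_ps[c]))
        available.remove(best)
        pairs.append((t_idx, best))
        total += abs(t_score - control_ps[best])
    return pairs, total


def optimal_match(treated_ps, control_ps):
    """Optimal 1:1 matching by brute force: minimise the total distance over all pairings."""
    best_pairs, best_total = None, float("inf")
    for perm in permutations(range(len(control_ps)), len(treated_ps)):
        total = sum(abs(t - control_ps[c]) for t, c in zip(treated_ps, perm))
        if total < best_total:
            best_pairs, best_total = list(enumerate(perm)), total
    return best_pairs, best_total


treated_ps = [0.50, 0.40]
control_ps = [0.45, 0.60]
print(greedy_match(treated_ps, control_ps))   # pairs [(0, 0), (1, 1)], total ≈ 0.25
print(optimal_match(treated_ps, control_ps))  # pairs [(0, 1), (1, 0)], total ≈ 0.15
```

Greedy matching lets the first treated unit grab the control at 0.45, leaving the second with a distant match; the optimal pairing accepts a slightly worse first pair to cut the total distance from about 0.25 to about 0.15.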

4.3. Stratification: Ensuring Comparable Strata

Stratification sorts customers into groups, or strata, based on their propensity scores, for example by splitting the scores into quintiles. Customers with similar likelihoods of joining the loyalty program end up in the same bucket. This reduces the chance of comparing customers with very different characteristics: without it, we might compare program members with non-members whose purchase behavior differs for reasons unrelated to the program. Within each stratum, customers have similar chances of joining, so within-stratum comparisons give a more precise evaluation of the impact of the loyalty program.

4.4. Comparison and Causal Effect Estimation: Unveiling Impact

Once customers are organized into strata based on propensity scores, we compare the mean purchase amounts of those who joined the loyalty program (treated, T = 1) and those who didn't (untreated, T = 0) within each stratum.

Next, we calculate the Average Causal Effect (ACE) for each stratum:

$$\text{ACE}_s = \bar{Y}_{s1} - \bar{Y}_{s0}$$

This equation gives the Average Causal Effect for a specific stratum $s$: the difference between the mean purchase amount of treated individuals ($\bar{Y}_{s1}$) and untreated individuals ($\bar{Y}_{s0}$) within that stratum.

Next, we take the weighted average of the stratum-specific ACEs:

$$\text{ACE} = \sum_s w_s \, \text{ACE}_s, \qquad w_s = \frac{n_s}{N}$$

Here, each stratum-specific ACE is multiplied by its weight $w_s$, the proportion of individuals in stratum $s$ ($n_s$ out of $N$), and the results are summed. This gives an overall ACE in which each stratum contributes according to its size.

The ACE measures how much the loyalty program influenced purchase amounts. A positive $\text{ACE}_s$ suggests the program increased purchases in stratum $s$; weighting by stratum size combines these into an assessment of the program's overall impact.
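The stratification estimator described above can be sketched in a few lines of pandas. This is a minimal illustration rather than the post's method (the implementation later in the post uses nearest-neighbour matching instead): the function name `stratified_ace`, the choice of five quantile-based strata, and the rule of skipping strata that lack either treated or untreated units are assumptions. The default column names match the DataFrame built later in the post.

```python
import numpy as np
import pandas as pd


def stratified_ace(df, score_col="propensity", treat_col="Treatment",
                   outcome_col="PurchaseAmount", n_strata=5):
    """Stratify on propensity-score quantiles and combine the per-stratum ACEs."""
    # Assign each unit to a quantile-based stratum of its propensity score
    strata = pd.qcut(df[score_col], q=n_strata, labels=False, duplicates="drop")
    effects, sizes = [], []
    for _, group in df.groupby(strata):
        is_treated = group[treat_col] == 1
        if is_treated.all() or not is_treated.any():
            # No within-stratum contrast is possible, so the stratum is dropped
            continue
        ace_s = group.loc[is_treated, outcome_col].mean() - group.loc[~is_treated, outcome_col].mean()
        effects.append(ace_s)
        sizes.append(len(group))
    # Weighted average with w_s = n_s / N over the strata that were kept
    return float(np.average(effects, weights=sizes))


# Toy data: outcomes jump by 100 above a score of 0.5, and treatment adds 3 everywhere
n = 100
demo = pd.DataFrame({"propensity": np.linspace(0.05, 0.95, n),
                     "Treatment": np.tile([0, 1], n // 2)})
demo["PurchaseAmount"] = 100.0 * (demo["propensity"] > 0.5) + 3.0 * demo["Treatment"]
print(stratified_ace(demo))  # 3.0
```

In the toy data every stratum holds treated and untreated units with the same mix of baselines, so each $\text{ACE}_s$ is 3 and so is the weighted average.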

5.0 Implementing PSM from scratch

Let us now work through an example to demonstrate propensity score matching. We will generate some synthetic data and then develop the code to implement the estimation method using propensity score matching.

We will start by importing the necessary libraries:

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import NearestNeighbors
from sklearn.preprocessing import StandardScaler
from sklearn.metrics import mean_absolute_error
```

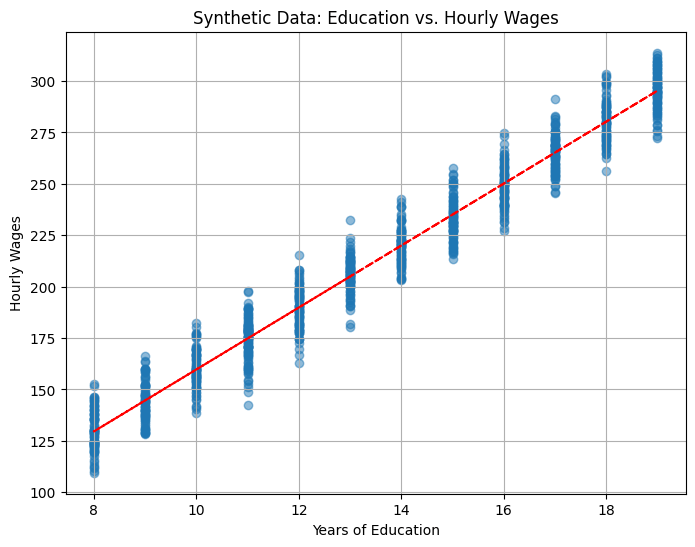

Next let us synthetically generate data for this exercise.

```python
# Generate synthetic data
np.random.seed(42)

# Covariates
n_samples = 1000
age = np.random.normal(35, 5, n_samples)
income = np.random.normal(50000, 10000, n_samples)
historical_purchase = np.random.normal(100, 20, n_samples)

# Convert age to an integer
age = age.astype(int)

# Treatment variable (loyalty program)
treatment = np.random.choice([0, 1], n_samples, p=[0.7, 0.3])

# Outcome variable (purchase amount)
purchase_amount = 50 + 10 * treatment + 5 * age + 0.5 * income + 2 * historical_purchase + np.random.normal(0, 10, n_samples)

# Create a DataFrame
data = pd.DataFrame({
    'Age': age,
    'Income': income,
    'HistoricalPurchase': historical_purchase,
    'Treatment': treatment,
    'PurchaseAmount': purchase_amount
})

data.head()
```

Let us look at the above code in detail:

- Setting the seed: `np.random.seed(42)` ensures reproducibility by fixing the random seed.
- Generating covariates:
  - `age`: simulates ages from a normal distribution with a mean of 35 and a standard deviation of 5.
  - `income`: simulates income from a normal distribution with a mean of 50000 and a standard deviation of 10000.
  - `historical_purchase`: simulates historical purchase data from a normal distribution with a mean of 100 and a standard deviation of 20.
- Converting age to integer: `age = age.astype(int)` converts the ages to integers.
- Generating treatment and outcome:
  - `treatment`: simulates a binary treatment variable (0 or 1) representing enrollment in the loyalty program. The `p=[0.7, 0.3]` argument gives each customer a 30% chance of enrolling, independently of their covariates.
  - `purchase_amount`: generates the outcome as a linear combination of the treatment and the covariates plus a random normal error term. Because of the `10 * treatment` term, the true effect of enrollment is 10 by construction.
- Creating the DataFrame: `data` is a pandas DataFrame with columns ‘Age’, ‘Income’, ‘HistoricalPurchase’, ‘Treatment’ and ‘PurchaseAmount’ built from the generated data.

This synthetic dataset serves as a starting point for implementing propensity score matching, allowing you to compare the outcomes of treated and untreated groups while controlling for covariates.
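Because `treatment` above is drawn without regard to the covariates, this dataset contains no confounding for PSM to remove. The sketch below is a hypothetical variant, not the post's data, in which higher-income customers are more likely to enroll. It shows that a naive difference in means is then badly biased, which is the situation PSM is designed for. The logistic enrollment rule and its coefficient of 1.5 are assumptions chosen for illustration, and the outcome keeps only the income term of the equation above.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000
income = rng.normal(50000, 10000, n)

# Hypothetical enrollment rule: the probability of joining rises with income (confounding)
p_enroll = 1.0 / (1.0 + np.exp(-1.5 * (income - 50000) / 10000))
treatment = rng.binomial(1, p_enroll)

# Simplified outcome with the same treatment effect of 10 and the same income coefficient
purchase_amount = 50 + 10 * treatment + 0.5 * income + rng.normal(0, 10, n)

# Naive comparison ignores that enrolled customers are richer on average
naive_diff = purchase_amount[treatment == 1].mean() - purchase_amount[treatment == 0].mean()
print(f"Naive difference in means: {naive_diff:.1f} (true effect: 10)")
```

The naive difference lands in the thousands, almost all of it driven by the income gap between enrolled and non-enrolled customers rather than by the program.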

Next, let us define the variables for the propensity modelling step:

```python
# Step 1: Propensity Score Estimation (Logistic Regression)
X_covariates = data[['Age', 'Income', 'HistoricalPurchase']]
y_treatment = data['Treatment']

# Standardize covariates
scaler = StandardScaler()
X_covariates_standardized = scaler.fit_transform(X_covariates)
```

First, we select the covariates (features) that will be used to estimate the propensity score. In this case, they are ‘Age’, ‘Income’ and ‘HistoricalPurchase’.

After that, we select the treatment variable, which indicates whether an individual is enrolled in the loyalty program (1) or not (0).

We then standardize the covariates with the `fit_transform` method of `StandardScaler`, which transforms each covariate to have a mean of 0 and a standard deviation of 1.

The standardized covariates will then be used in logistic regression to predict the probability of treatment. This propensity score is a crucial component of propensity score matching, allowing us to create comparable groups with similar propensities for treatment. Let us look at that part next:

```python
# Fit logistic regression model
propensity_model = LogisticRegression()
propensity_model.fit(X_covariates_standardized, y_treatment)
```

The logistic regression model is trained to learn the relationship between the standardized covariates and the binary treatment variable. It learns the patterns in the covariates that are indicative of treatment assignment, producing a probability score that becomes a key element in creating balanced treatment and control groups.

In the next step, we use this model to predict the probability of each individual being in the treatment group based on their covariates.

```python
# Predict propensity scores (column 1 = probability of being enrolled)
propensity_scores = propensity_model.predict_proba(X_covariates_standardized)[:, 1]

# Add the propensity scores to the data frame
data['propensity'] = propensity_scores
data.head()
```

Here we use the trained logistic regression model (`propensity_model`) to predict propensity scores for each observation in the dataset. The `predict_proba` method returns the predicted probabilities for both classes (0 and 1), and `[:, 1]` selects the probabilities for class 1 (treatment group). After predicting the probabilities, we create a new column in the DataFrame (`data`) named ‘propensity’ and populate it with the predicted propensity scores.

The propensity scores represent the estimated probability of an individual being in the treatment group based on their covariates. In the context of the problem, the “treatment” refers to whether an individual has enrolled in the loyalty program of the company. A higher propensity score for an individual indicates a higher estimated probability or likelihood that this person would enroll in the loyalty program, given their observed characteristics.

As seen earlier, propensity score matching aims to create comparable treatment and control groups. Adding the propensity scores to the dataset allows for subsequent steps in which individuals with similar propensity scores can be paired or matched.

```python
# Find the treated and control groups
treated = data.loc[data['Treatment'] == 1]
control = data.loc[data['Treatment'] == 0]

# Fit the nearest neighbour model on the propensity scores of the control group (have not signed up)
control_neighbors = NearestNeighbors(n_neighbors=1, algorithm="ball_tree").fit(control["propensity"].values.reshape(-1, 1))
```

This section of the code is aimed at finding the nearest neighbors in the control group for each individual in the treatment group based on their propensity scores. Let’s break down the steps and provide intuition in the context of the problem statement:

The first two lines create a subset of the data containing individuals who received the treatment (enrolled in the loyalty program) and a subset containing those who did not.

The `NearestNeighbors` call fits a nearest-neighbour model on the propensity scores of the control group. It effectively builds a model that can find the closest individual in the control group for any given propensity score. Setting `n_neighbors=1` means that for each individual in the treatment group, the algorithm will return the single nearest neighbor in the control group based on propensity score.

In the context of the loyalty program, this step is pivotal for creating a balanced comparison. Each treated individual is paired with the most similar individual from the control group in terms of their likelihood of enrolling in the program (propensity score). This pairing helps to mitigate the effects of confounding variables and allows for a more accurate estimation of the causal effect of the loyalty program on purchase behavior.

Next, we match people from the treated and control groups:

```python
# For each member of the treated group (signed up), find the nearest control and its distance
distances, indices = control_neighbors.kneighbors(treated["propensity"].values.reshape(-1, 1))
```

In the above code, the goal is to find the distances and corresponding indices of the nearest neighbors in the control group for each individual in the treated group.

The `kneighbors` call uses the fitted nearest-neighbour model (`control_neighbors`) to find the distances and indices of the nearest neighbors in the control group for each individual in the treated group:

- `distances`: the distance between each treated individual and its nearest neighbor in the control group, i.e. how close their propensity scores are.
- `indices`: the positions (within `control`) of the nearest neighbors corresponding to each treated individual.

In propensity score matching, the objective is to create pairs of treated and control individuals with similar propensity scores, so the distances and indices obtained here are crucial for assessing the quality of matching. Small distances indicate close matches; large distances flag treated individuals for whom no comparable control exists, which weakens the causal effect estimate.
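The distances can also be used to drop poor matches with a caliper, a maximum allowed distance between matched scores. A frequently cited rule of thumb sets the caliper at 0.2 standard deviations of the logit of the propensity score; the sketch below applies a caliper directly to the raw scores for simplicity. `match_with_caliper` is a hypothetical NumPy helper that performs the same 1:1 nearest-neighbour matching with replacement as the `NearestNeighbors` call (up to tie-breaking). It builds the full treated × control distance matrix, which is fine at this dataset's size.

```python
import numpy as np


def match_with_caliper(treated_ps, control_ps, caliper):
    """1:1 nearest-neighbour matching (with replacement) on the propensity score,
    flagging treated units whose best match is farther away than `caliper`."""
    treated_ps = np.asarray(treated_ps, dtype=float)
    control_ps = np.asarray(control_ps, dtype=float)
    # |treated_i - control_j| for every pair, shape (n_treated, n_control)
    dist = np.abs(treated_ps[:, None] - control_ps[None, :])
    nearest = dist.argmin(axis=1)
    nearest_dist = dist[np.arange(len(treated_ps)), nearest]
    keep = nearest_dist <= caliper
    return nearest, nearest_dist, keep


nearest, nearest_dist, keep = match_with_caliper([0.30, 0.90], [0.28, 0.50], caliper=0.05)
print(nearest, nearest_dist, keep)
```

Treated units with `keep == False` would be excluded before averaging, which changes the estimand to the ATT among treated units that have comparable controls.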

Let us now see the process of causal estimation using the distance and indices we just calculated

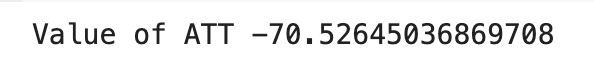

```python
# Calculation of the Average Treatment Effect on the Treated (ATT)
att = 0
numtreatedunits = treated.shape[0]
print('Number of treated units', numtreatedunits)

for i in range(numtreatedunits):
    treated_outcome = treated.iloc[i]["PurchaseAmount"].item()
    # indices[i][0] is the position (within `control`) of the matched control
    control_outcome = control.iloc[indices[i][0]]["PurchaseAmount"].item()
    att += treated_outcome - control_outcome

att /= numtreatedunits
print("Value of ATT", att)
```

The above code calculates the Average Treatment Effect on the Treated (ATT). Let us try to understand the intuition behind the above step.

`att`: The ATT measures the average causal effect of the treatment (loyalty program) on the treated group, i.e. how the purchase amounts of treated individuals differ from those of their matched controls.

`numtreatedunits`: the total number of treated individuals.

The `for` loop iterates through each treated individual, computes the difference between their observed purchase amount and that of their matched control, and accumulates these differences in `att`. Dividing by `numtreatedunits` turns the sum into an average.

A positive ATT indicates that, on average, the treated individuals had higher purchase amounts than their matched controls, which would suggest a positive causal effect of the loyalty program on purchase amounts. A negative ATT implies the opposite: on average, treated individuals had lower purchase amounts than their matched controls.

In our case the ATT is negative, indicating that, on average, treated individuals have lower purchase amounts than their matched controls.

By matching each treated individual with their closest counterpart in the control group (based on propensity scores), these steps create a balanced comparison that reduces the impact of observed confounding variables. The ATT is the average difference in outcomes between the treated individuals and their matched controls, providing an estimate of the causal effect of the loyalty program on purchase behavior for those who enrolled.
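Whether matching actually produced a balanced comparison can be checked rather than assumed. A common diagnostic is the standardized mean difference (SMD) of each covariate between the treated group and its matched controls; absolute values below about 0.1 are often read as adequate balance, though this is a rule of thumb rather than a formal test. The function name below is hypothetical, and it uses the pooled-variance form of the SMD.

```python
import numpy as np


def standardized_mean_difference(x_treated, x_control):
    """SMD = (mean_t - mean_c) / sqrt((var_t + var_c) / 2), using sample variances."""
    x_treated = np.asarray(x_treated, dtype=float)
    x_control = np.asarray(x_control, dtype=float)
    pooled_sd = np.sqrt((x_treated.var(ddof=1) + x_control.var(ddof=1)) / 2.0)
    return (x_treated.mean() - x_control.mean()) / pooled_sd


print(standardized_mean_difference([1, 2, 3], [0, 1, 2]))  # 1.0
```

Applied to this post's data, one would compare, for example, `treated["Income"]` with `control["Income"].to_numpy()[indices[:, 0]]`, using the `indices` from the treated-to-control matching step (before they are overwritten in the ATC step), and repeat for each covariate.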

Next, similar to the ATT, we calculate the Average Treatment Effect on the Control (ATC). Let us look at those steps now.

```python
# Computing ATC: fit the nearest neighbour model on the treated group's propensity scores
treated_neighbors = NearestNeighbors(n_neighbors=1, algorithm="ball_tree").fit(treated["propensity"].values.reshape(-1, 1))
distances, indices = treated_neighbors.kneighbors(control["propensity"].values.reshape(-1, 1))
print(distances.shape)

# Calculating ATC from the neighbours of the control group
atc = 0
numcontrolunits = control.shape[0]
print('Number of control units', numcontrolunits)

for i in range(numcontrolunits):
    control_outcome = control.iloc[i]["PurchaseAmount"].item()
    # indices[i][0] is the position (within `treated`) of the matched treated unit
    treated_outcome = treated.iloc[indices[i][0]]["PurchaseAmount"].item()
    atc += treated_outcome - control_outcome

atc /= numcontrolunits
print("Value of ATC", atc)
```

Similar to the earlier step, we calculate the Average Treatment Effect on the Control group (ATC). The ATC estimates how the purchase amounts of control individuals would have changed had they received the treatment (the loyalty program). Here the roles are reversed: a nearest-neighbour model is fitted on the treated group's propensity scores, each control individual is matched to its closest treated individual, and the loop accumulates the difference between the matched treated individual's purchase amount and the control individual's own purchase amount.

While the ATT focuses on the treated group, comparing each treated individual's outcome with that of its matched control, the ATC focuses on the control group, comparing each control individual's outcome with that of its matched treated counterpart.
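In symbols, with $N_1$ treated units, $N_0$ control units, and $m(i)$ denoting the unit matched to unit $i$ from the other group, the two loops compute the following (both accumulate "treated outcome minus control outcome"):

$$
\widehat{\text{ATT}} = \frac{1}{N_1}\sum_{i:\,T_i=1}\left(Y_i - Y_{m(i)}\right), \qquad
\widehat{\text{ATC}} = \frac{1}{N_0}\sum_{j:\,T_j=0}\left(Y_{m(j)} - Y_j\right)
$$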

Having calculated the ATT and ATC, it's time to calculate the Average Treatment Effect (ATE):

```python
# Calculation of Average Treatment Effect
ate = (att * numtreatedunits + atc * numcontrolunits) / (numtreatedunits + numcontrolunits)
print("Value of ATE", ate)
```

ATE represents the weighted average of the Average Treatment Effect on the Treated (ATT) and the Average Treatment Effect on the Control (ATC). It combines the effects on the treated and control groups based on their respective sample sizes. ATE provides an overall measure of the average causal effect of the treatment (loyalty program) on the entire population, considering both the treated and control groups.
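As a quick arithmetic check of this weighting, the sketch below uses made-up numbers (not results from this post): with 300 treated units, 700 control units, an ATT of 2 and an ATC of 4, the ATE is (2 × 300 + 4 × 700) / 1000 = 3.4. The function name is hypothetical.

```python
def ate_from_att_atc(att, atc, n_treated, n_control):
    """ATE as the sample-size-weighted average of ATT and ATC."""
    return (att * n_treated + atc * n_control) / (n_treated + n_control)


# Illustrative numbers only
print(ate_from_att_atc(att=2.0, atc=4.0, n_treated=300, n_control=700))  # 3.4
```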

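The calculated ATE (-140.38) would, taken at face value, suggest that the treatment lowers purchase amounts across the whole population. That reading does not hold for this example, however. The synthetic data were generated with a true treatment effect of +10 (the `10 * treatment` term in `purchase_amount`), and treatment was assigned independently of the covariates. The outcome is dominated by `0.5 * income`, whose standard deviation is about 5,000, and since the propensity scores carry little information when assignment is random, matched pairs are not guaranteed to have similar incomes. The estimate is therefore mostly noise. Rather than concluding that the loyalty program should be discontinued, the lesson is that a matching estimate needs a covariate-balance check and a measure of uncertainty before it informs a business decision.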

Conclusion

Propensity score matching is a powerful technique in causal inference, particularly when dealing with observational data. By estimating the likelihood of receiving treatment (propensity score), we can create balanced groups, ensuring that treated and untreated individuals are comparable in terms of covariates. The step-by-step process involves estimating propensity scores, forming matched pairs, and calculating treatment effects. Through this method, we mitigate the impact of confounding variables, enabling more accurate assessments of causal relationships.

This method is useful in many domains. Let us look at some important use cases.

Retail: Promotional Campaign Effectiveness

- Treated Group: Customers exposed to a promotional campaign.

- Control Group: Customers not exposed to the campaign.

- Effects: Evaluate the impact of the promotional campaign on sales and customer engagement.

- Covariates: Demographics, purchase history, and online engagement metrics.

In retail, understanding the effectiveness of promotional campaigns is crucial for allocating marketing resources wisely. The treated group in this scenario consists of customers who have been exposed to a specific promotional campaign, while the control group comprises customers who have not encountered the campaign. The goal is to assess the influence of the promotional efforts on sales and customer engagement. To ensure a fair comparison, covariates such as demographics, purchase history, and online engagement metrics are considered. Propensity score matching helps mitigate the impact of confounding variables, allowing for a more precise evaluation of the promotional campaign’s actual impact on retail performance.

Finance: Loan Default Prediction

- Treated Group: Individuals who have received a specific type of loan.

- Control Group: Individuals who have not received that particular loan.

- Effects: Assess the impact of the loan on default rates and repayment behavior.

- Covariates: Credit score, income, employment status.

In the financial sector, understanding what drives loan default rates is crucial for risk management. The treated group here consists of individuals who have received a specific type of loan, while the control group comprises individuals who have not been granted that particular loan. The objective is to evaluate the influence of this specific loan on default rates and overall repayment behavior. To account for potential confounding factors, covariates such as credit score, income, and employment status are considered. Propensity score matching aids in creating a balanced comparison between the treated and control groups, enabling a more accurate estimation of the causal effect of the loan on default rates within the financial domain.

Telecom: Customer Retention Strategies

- Treated Group: Customers who have been exposed to a specific customer retention strategy.

- Control Group: Customers who have not been exposed to any such strategy.

- Effects: Evaluate the effectiveness of customer retention strategies on reducing churn.

- Covariates: Contract length, usage patterns, customer complaints.

In the telecommunications industry, where customer churn is a significant concern, assessing the impact of retention strategies is vital. The treated group comprises customers who have experienced a particular retention strategy, while the control group consists of customers who have not been exposed to any such strategy. The aim is to examine the effectiveness of these retention strategies in reducing churn rates. Covariates such as contract length, usage patterns, and customer complaints are considered to control for potential confounding variables. Propensity score matching enables a more rigorous comparison between the treated and control groups, providing valuable insights into the causal effects of different retention strategies in the telecom sector.

Manufacturing: Production Process Optimization

- Treated Group: Factories that have implemented a new and optimized production process.

- Control Group: Factories continuing to use the old production process.

- Effects: Evaluate the impact of the new production process on efficiency and product quality.

- Covariates: Factory size, workforce skill levels, historical production performance.

In the manufacturing domain, continuous improvement is crucial for staying competitive. To assess the impact of a newly implemented production process, factories using the updated method constitute the treated group, while those adhering to the old process make up the control group. The effects under scrutiny involve measuring improvements in efficiency and product quality resulting from the new production process. Covariates such as factory size, workforce skill levels, and historical production performance are considered during the analysis to account for potential confounding factors. Propensity score matching aids in providing a robust causal estimation, enabling manufacturers to make data-driven decisions about process optimization.

Propensity score matching is a powerful tool that allows researchers and decision-makers to glean insights into the impact of interventions. By carefully balancing treated and control groups based on covariates, we can discern causal relationships in observational data while reducing bias from observed confounding variables, providing a more accurate understanding of cause and effect. This method has widespread applications across diverse industries, from retail and finance to telecom, manufacturing, and marketing.
